‘Decoding Deception’ provides tools necessary to navigate AI world

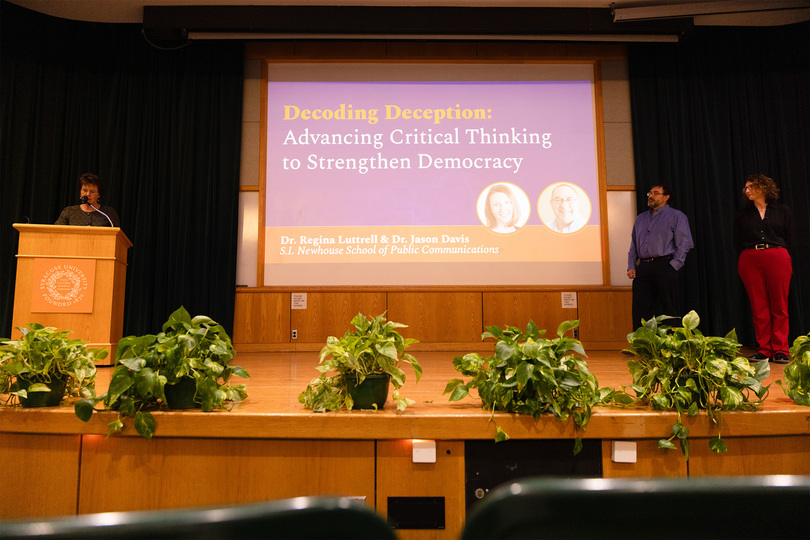

The event, part of Gretchen Ritter's Life Together Series, provided insight into methods for detecting AI in media. Regina Luttrell and Jason Davis led the discussion and explained the importance of thinking critically about everyday media consumption. Ella Chan | Asst. Photo Editor

On Wednesday evening, Syracuse University’s Regina Luttrell and Jason Davis led “Decoding Deception,” a discussion unpacking the rise of generative artificial intelligence and its role in spreading disinformation. The event is the second in SU Vice President for Civic Engagement and Education Gretchen Ritter’s Life Together Series.

Ritter said the lecture provides valuable insight into methodology for AI detection and management in media. After the discussion, she called on the university to continue offering the series going forward.

“For people to be well-informed citizens, they need to be able to understand whether or not this information that they’re receiving is reliable,” Ritter said. “We’re living in a moment where we know that there’s a lot of unreliable information out there, and it’s one of the things that tends to feed political polarization, which is, in a democratic society, very problematic.”

She also said there is a “real need” for the program given the increasing popularity of generative AI.

Luttrell, an associate professor of public relations, and Davis, a research professor, are co-directors of the S.I. Newhouse School of Public Communications’ Emerging Insights Lab, which observes and analyzes the presence of AI in media. Their research served as the basis of the presentation, which challenged audience members to think critically about AI while remaining mindful of their own media consumption.

Luttrell said she’s noticed a shift in her students’ attitudes toward AI, noting that they were initially hesitant to use it in the classroom. She and Davis both agreed that the integration of AI into society, and academia in particular, is one of the “fastest-moving” advancements they’ve ever seen.

“It reminds me a lot of when social media came out and social media was part of the classroom and now there isn’t a class that doesn’t incorporate some form of social media,” Luttrell said. “I think there’s always a phase of adoption. You have the really early adopters, and then you have those that are going to wait it out. I think some faculty are just at the end of waiting.”

A central focus of Luttrell and Davis’ research is the difficulty of recognizing AI use. To demonstrate, they asked attendees to distinguish between a series of AI-generated images and authentic photographs. They also asked attendees to compare a poem by a well-known poet with an AI-generated version in which the software was prompted to mimic the original author’s writing style and cadence.

Drawing on their previous research, Luttrell and Davis said the untrained human eye correctly identifies images or text modified by AI only about 50% of the time. AI detection software, such as the tool used at the Emerging Insights Lab, correctly detects altered media approximately 95% of the time.

Ananya Das, a former BBC journalist and current SU master’s student, said that in India, the overwhelming presence of misinformation and disinformation has “changed the conversation” about media.

Das, who lived in India before coming to SU to study international relations, said she believes the rest of the world should focus on misinformation, especially given the lack of global AI regulation.

“(AI) is getting increasingly more sophisticated in terms of technology, but I would still like to believe that human beings are smarter than AI,” Das said. “I think this kind of technology in the wrong hands is also very concerning.”

Luttrell and Davis instead encouraged people to adapt by using AI themselves and to look critically at everything they encounter in the media.

To get better at detecting AI, Luttrell and Davis recommend that people make an effort to learn where AI-generated content may appear.

“Trust in media over the last several years has really deteriorated, and so I think sometimes we don’t actually stop and fact check ourselves, because we just automatically assume if something is part of that little filter bubble that we’re in,” Luttrell said.

Newhouse Dean Mark Lodato attended the event and echoed Ritter’s sentiment. He said the school will continue to support similar efforts to minimize the impact of misinformation and disinformation, and he pointed to the importance of “informed communication” in today’s society.

Despite concerns about the harms of AI, Davis emphasized how crucial the technology has become in the new media age.

“Generative AI is going to accelerate all the people who have it and have it as a tool, compared to people who are going to be unable to access it, and therefore becoming both a victim of not having that skill set and a victim of not being able to sort of tell when it’s being used against them,” Davis said.

Davis referred to this as the “digital divide,” highlighting an accessibility gap between those able to familiarize themselves with AI and those who remain in the dark about its presence due to a lack of resources.

Luttrell added that while AI began as a free tool, the divide Davis described will only grow as paywalls begin to limit access to it.

“That last mile with AI is something that people continually don’t want to talk about, because it’s the expensive mile, but it’s the one that matters,” Davis said. “And it’s not solved yet, but I hope we get it right this time.”